Machines can’t understand language like we do. They need numerical text representation to process text well. This is a big challenge for artificial intelligence today.

Old methods like one-hot encoding make vectors that are too sparse and not efficient. They can’t really show how words relate to each other. This makes them not very good for complex tasks.

Word embeddings change this by producing numerical representations that are dense and carry meaning. These vectors preserve how words are used in context. This lets machine learning algorithms work with language far more effectively.

This new way is key for advanced natural language processing. It helps with things like understanding how people feel in text and translating languages. It’s a big step towards making computers understand us better.

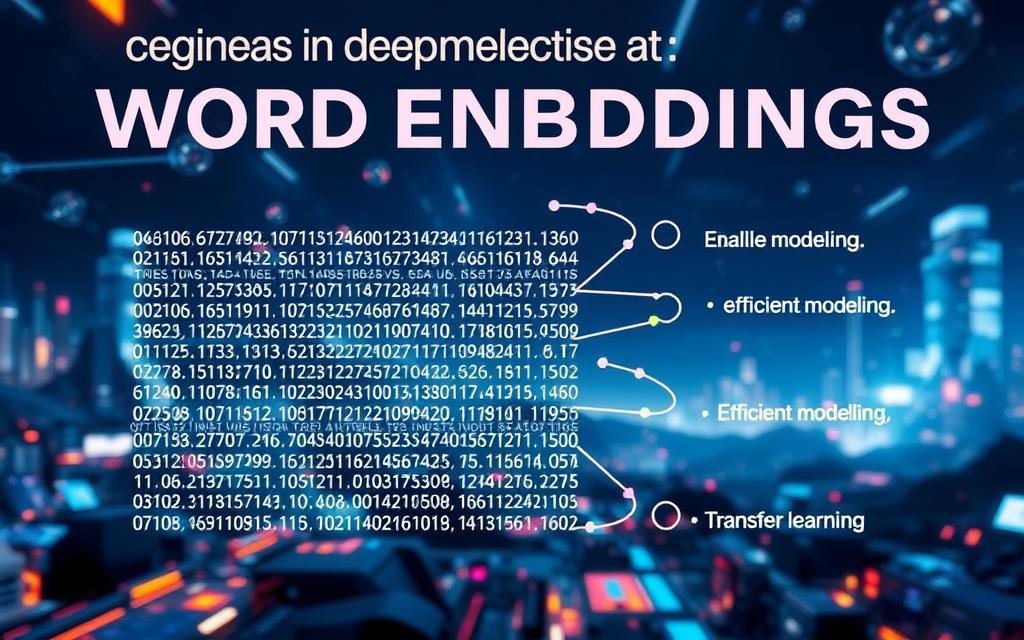

Defining Word Embeddings and Their Significance

Word embeddings are a big step forward in how computers understand language. They turn words into numbers that show their meaning and how they relate to each other. This lets machines grasp language in ways they couldn’t before.

The Necessity of Numerical Text Representation

Computers only work with numbers, so turning text into numbers is key. Old methods like one-hot encoding used a lot of space and didn’t show word connections well. They had big problems:

- They needed a lot of space for big vocabularies

- They didn’t show how words relate to each other

- They used a lot of memory and were slow

Now, word embeddings use dense, lower-dimensional numbers. This lets machines see word patterns and connections. This is the base for today’s language processing systems.
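To make the limitation concrete, here is a minimal sketch of one-hot encoding over a tiny, made-up vocabulary. The vocabulary and the helper names `one_hot` and `dot` are assumptions for illustration. Every pair of different words has a dot product of zero, so the encoding carries no notion of similarity.

```python
# Toy vocabulary (an assumption for illustration only).
vocab = ["cat", "dog", "car", "truck"]
index = {word: i for i, word in enumerate(vocab)}


def one_hot(word):
    """Return a vector with a single 1 at the word's index."""
    vec = [0] * len(vocab)
    vec[index[word]] = 1
    return vec


def dot(a, b):
    return sum(x * y for x, y in zip(a, b))


cat, dog = one_hot("cat"), one_hot("dog")
print(cat)            # [1, 0, 0, 0]: one slot per vocabulary word
print(dot(cat, dog))  # 0: "cat" looks no closer to "dog" than to "truck"
```

The vector length grows with the vocabulary, which is where the space cost comes from.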

Evolution from Traditional Methods

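The idea behind word embeddings is often traced to the linguist J.R. Firth, who wrote in 1957 that “you shall know a word by the company it keeps”. In other words, a word’s meaning shows in the words that tend to appear around it. This idea led to early computational methods like: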

- Vector space models from information retrieval

- Latent semantic analysis for document similarity

- Co-occurrence matrix representations

These old methods found some word connections but missed the deeper meaning. Word2Vec, introduced in 2013, changed this by using a shallow neural network to learn word vectors from large amounts of text. This was a big leap from simple counting to learning from context by prediction.
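As a rough sketch of the counting approach, the snippet below builds co-occurrence counts from a two-sentence corpus. The corpus, the window size, and the function name `cooccurrence_counts` are all assumptions chosen for illustration.

```python
from collections import Counter

# Tiny corpus, made up for illustration.
corpus = [
    "the cat sat on the mat",
    "the dog sat on the rug",
]


def cooccurrence_counts(sentences, window=1):
    """Count how often each ordered word pair appears within `window` tokens."""
    counts = Counter()
    for sentence in sentences:
        tokens = sentence.split()
        for i, word in enumerate(tokens):
            lo = max(0, i - window)
            hi = min(len(tokens), i + window + 1)
            for j in range(lo, hi):
                if j != i:
                    counts[(word, tokens[j])] += 1
    return counts


counts = cooccurrence_counts(corpus)
print(counts[("sat", "on")])   # 2: "sat" is followed by "on" in both sentences
print(counts[("cat", "dog")])  # 0: the two words never appear side by side
```

Although “cat” and “dog” never co-occur here, both appear next to “the” and “sat”. Count-based methods use exactly this kind of shared neighbourhood as their signal.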

Today’s word embeddings keep getting better. They now understand more complex language features, like different meanings of words. This journey from simple numbers to deep language understanding has changed how machines talk to us.

How Word Embeddings Function: Core Mechanisms

Word embeddings show how machines learn language patterns. They turn words into numbers that keep their meanings. This helps machines understand language better.

Capturing Semantic Relationships

Word embeddings are great at finding connections between words. They use the idea that words in similar situations mean similar things.

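They use vector arithmetic to show how words are related. The classic example is “king – man + woman ≈ queen”: subtracting the vector for “man” from “king” and adding “woman” gives a vector close to the one for “queen”. Words that mean similar things are placed close together.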
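The analogy can be sketched with hand-crafted two-dimensional vectors. These numbers are invented so that the arithmetic works out exactly; with real trained embeddings the result is only approximately “queen”. The function name `analogy` is hypothetical.

```python
# Hand-crafted 2-D vectors: axis 0 ~ "royalty", axis 1 ~ "gender".
# Real embeddings have hundreds of learned dimensions with no such labels.
vectors = {
    "king":  [1.0,  1.0],
    "queen": [1.0, -1.0],
    "man":   [0.0,  1.0],
    "woman": [0.0, -1.0],
    "apple": [-1.0, 0.0],
}


def analogy(a, b, c):
    """Return the word whose vector is closest to vec(a) - vec(b) + vec(c)."""
    target = [x - y + z for x, y, z in zip(vectors[a], vectors[b], vectors[c])]

    def squared_distance(word):
        return sum((t - v) ** 2 for t, v in zip(target, vectors[word]))

    # Exclude the query words themselves, as is usual in analogy tests.
    candidates = [w for w in vectors if w not in (a, b, c)]
    return min(candidates, key=squared_distance)


print(analogy("king", "man", "woman"))  # queen
```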

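They also use distance to show how strong a word connection is. Cosine similarity measures how closely two words are related by taking the cosine of the angle between their vectors. Values near 1 mean the vectors point the same way, and values near 0 mean the words are largely unrelated.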
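Cosine similarity is the dot product of two vectors divided by the product of their lengths. A minimal sketch, using made-up three-dimensional vectors rather than trained ones:

```python
import math


def cosine_similarity(a, b):
    """Cosine of the angle between vectors a and b (1 = same direction)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


# Made-up 3-D vectors, for illustration only.
coffee = [0.9, 0.8, 0.1]
tea = [0.8, 0.9, 0.2]
laptop = [0.1, 0.2, 0.9]

print(cosine_similarity(coffee, tea) > cosine_similarity(coffee, laptop))  # True
```

Because the measure depends only on direction, two vectors of very different lengths can still count as similar.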

Key Architectural Approaches

Today’s word embedding systems use advanced neural networks. These networks learn from huge amounts of text to find the best word representations.

They learn by guessing words based on their context. This way, they don’t need labelled data to learn.

The Word2Vec framework is a key example. It has two main ways to learn:

- Skip-gram model guesses context words from a target word

- Continuous Bag of Words (CBOW) guesses target words from context
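The difference between the two is easiest to see in the training examples each one builds from the same sentence. The sketch below assumes a window of one word on each side; the function names are hypothetical.

```python
def context_window(tokens, i, window):
    """Words within `window` positions of index i, excluding position i."""
    lo = max(0, i - window)
    return tokens[lo:i] + tokens[i + 1:i + 1 + window]


def skipgram_pairs(tokens, window=1):
    """(input = target word, label = one context word) examples."""
    return [(tokens[i], ctx)
            for i in range(len(tokens))
            for ctx in context_window(tokens, i, window)]


def cbow_pairs(tokens, window=1):
    """(input = all context words, label = target word) examples."""
    return [(context_window(tokens, i, window), tokens[i])
            for i in range(len(tokens))]


tokens = "the quick brown fox".split()
print(skipgram_pairs(tokens))
print(cbow_pairs(tokens))
```

Skip-gram produces one example per (target, context) pair, while CBOW produces one example per position with the whole context as input.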

Both use a shallow neural network. After training, the weights between the input and the hidden layer are kept as the word embeddings, one row per vocabulary word.
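Why the weights double as embeddings can be shown in a few lines. Multiplying a one-hot input by the first weight matrix simply selects one row of it. The matrix values below are arbitrary placeholders, not trained weights.

```python
# Toy input-to-hidden weight matrix: 4 vocabulary words x 3 hidden units.
# The numbers are arbitrary placeholders, not trained values.
W = [
    [0.2, -0.1, 0.7],   # row 0: "cat"
    [0.3,  0.0, 0.6],   # row 1: "dog"
    [-0.5, 0.9, 0.1],   # row 2: "car"
    [-0.4, 0.8, 0.0],   # row 3: "truck"
]


def hidden_layer(one_hot_input):
    """Multiply a one-hot row vector by W (the network's first layer)."""
    return [sum(one_hot_input[i] * W[i][j] for i in range(len(W)))
            for j in range(len(W[0]))]


dog_one_hot = [0, 1, 0, 0]
print(hidden_layer(dog_one_hot) == W[1])  # True: the product is just row 1
```

In practice, libraries skip the multiplication and look the row up directly.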

This way, machines can understand word relationships without being told. They learn from lots of text data.

Word Embedding in Deep Learning: Techniques and Models

Modern natural language processing uses advanced embedding techniques. These turn text into numbers that mean something. The models have grown a lot, helping us understand words and their meanings better.

Word2Vec: Skip-gram and CBOW Implementations

Word2Vec changed the game with two smart neural network designs. The Continuous Bag of Words (CBOW) model guesses words based on what’s around them. It’s great for common words and trains quickly.

The Skip-gram model does the opposite, guessing the context from a target word. It’s better at rare words and fine details. Both models make vectors that keep word meanings and relationships intact.
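For readers who want to see the learning step itself, here is a deliberately small skip-gram trainer in plain Python. It is a sketch under simplifying assumptions: a twelve-word toy corpus, a window of one, a full softmax over the vocabulary, and arbitrary choices for the embedding size and learning rate. Practical Word2Vec implementations use faster approximations such as negative sampling.

```python
import math
import random

corpus = "the cat sat on the mat the dog sat on the rug".split()
vocab = sorted(set(corpus))
word_id = {w: i for i, w in enumerate(vocab)}
V, D = len(vocab), 8  # vocabulary size, embedding size (arbitrary choice)

rng = random.Random(0)
W_in = [[rng.uniform(-0.5, 0.5) for _ in range(D)] for _ in range(V)]   # embeddings
W_out = [[rng.uniform(-0.5, 0.5) for _ in range(D)] for _ in range(V)]  # output weights

# Skip-gram pairs with a window of 1: (target id, context id).
pairs = [(word_id[corpus[i]], word_id[corpus[j]])
         for i in range(len(corpus))
         for j in (i - 1, i + 1) if 0 <= j < len(corpus)]


def train_epoch(lr=0.1):
    """One pass of SGD with a full softmax; returns the mean loss."""
    total = 0.0
    for t, c in pairs:
        h = W_in[t][:]  # copy, because W_in[t] is updated below
        scores = [sum(w * x for w, x in zip(W_out[k], h)) for k in range(V)]
        m = max(scores)
        exps = [math.exp(s - m) for s in scores]
        z = sum(exps)
        probs = [e / z for e in exps]
        total += -math.log(probs[c])
        # Gradient of the loss w.r.t. each score: probs - one_hot(c).
        grad = [p - (1.0 if k == c else 0.0) for k, p in enumerate(probs)]
        grad_h = [sum(grad[k] * W_out[k][d] for k in range(V)) for d in range(D)]
        for k in range(V):
            for d in range(D):
                W_out[k][d] -= lr * grad[k] * h[d]
        for d in range(D):
            W_in[t][d] -= lr * grad_h[d]
    return total / len(pairs)


losses = [train_epoch() for _ in range(50)]
print(losses[0] > losses[-1])  # the mean loss should fall as training proceeds
```

After training, each row of `W_in` is the learned vector for one word.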

These steps were a big leap forward. They gave us word embeddings that are more useful for NLP tasks.

Contextual Models: BERT and Beyond

Static embeddings give each word a single vector, so they struggle with words that have several meanings, such as “bank”. BERT and similar models tackle this by producing a different vector for a word depending on the sentence around it.

BERT reads the words on both sides of each token at once, so every word’s vector reflects its full sentence. This lets it grasp word meanings based on how they’re used.

BERT’s way of understanding words has changed NLP. Today’s systems use BERT as a base, making even better language tools.

These improvements keep making language processing more accurate and deep.

Practical Implementation in Deep Learning Systems

Implementing word embeddings needs careful thought about model choice and how they fit into systems. These choices affect how well systems work and how fast they run.

Selecting Appropriate Embedding Models

Choosing the right model is key. Think about your dataset size, the task’s complexity, and your computer’s power.

For smaller datasets or everyday tasks, Word2Vec or GloVe are great. They have pre-trained vectors that catch common meanings well.

For tasks that need to understand context or bigger datasets, BERT is better. It changes word meanings based on what’s around them.

Integration with Neural Network Architectures

Word embeddings are the first layer for many neural networks. They turn text into numbers that networks can understand.

RNNs and LSTMs do well with embedding layers. They’re great at handling text in order.
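The sketch below shows an embedding layer feeding a very simple recurrent network (a plain tanh RNN, not an LSTM). All weights are random and untrained, and the vocabulary, sizes, and function names are assumptions. The point is only the data flow: look up a vector for each token, then update a hidden state in order.

```python
import math
import random

rng = random.Random(0)
vocab = {"the": 0, "film": 1, "was": 2, "great": 3}
EMBED_DIM, HIDDEN_DIM = 4, 3  # arbitrary sizes for the sketch


def random_matrix(rows, cols):
    return [[rng.uniform(-1, 1) for _ in range(cols)] for _ in range(rows)]


embedding = random_matrix(len(vocab), EMBED_DIM)  # one row per word
W_xh = random_matrix(HIDDEN_DIM, EMBED_DIM)       # input -> hidden
W_hh = random_matrix(HIDDEN_DIM, HIDDEN_DIM)      # hidden -> hidden


def encode(sentence):
    """Embed each token, then fold the sequence into one hidden state."""
    h = [0.0] * HIDDEN_DIM
    for token in sentence.split():
        x = embedding[vocab[token]]  # embedding layer: a table lookup
        h = [math.tanh(sum(W_xh[i][j] * x[j] for j in range(EMBED_DIM)) +
                       sum(W_hh[i][k] * h[k] for k in range(HIDDEN_DIM)))
             for i in range(HIDDEN_DIM)]
    return h


print(encode("the film was great"))
print(encode("great was the film"))  # same words, different order
```

The two calls use the same words but give different hidden states, which is what makes recurrent models sensitive to word order.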

Transformers have changed how we use embeddings. Their self-attention works well with contextual embeddings for complex NLP tasks.
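A stripped-down version of self-attention shows how the same word can end up with different vectors in different sentences. This sketch uses made-up two-dimensional vectors and identity projections. Real Transformers learn separate query, key, and value projections, use several attention heads, and add position information.

```python
import math

# Made-up static vectors; "bank" has one vector regardless of meaning.
static = {
    "river": [1.0, 0.0],
    "money": [0.0, 1.0],
    "bank":  [0.5, 0.5],
}


def self_attention(tokens):
    """Scaled dot-product self-attention with identity projections."""
    X = [static[t] for t in tokens]
    scale = math.sqrt(len(X[0]))
    outputs = []
    for q in X:
        scores = [sum(a * b for a, b in zip(q, k)) / scale for k in X]
        exps = [math.exp(s - max(scores)) for s in scores]
        weights = [e / sum(exps) for e in exps]
        # Each output is a weighted average of all token vectors.
        outputs.append([sum(w * v[d] for w, v in zip(weights, X))
                        for d in range(len(q))])
    return outputs


bank_near_river = self_attention(["river", "bank"])[1]
bank_near_money = self_attention(["money", "bank"])[1]
print(bank_near_river)  # [0.75, 0.25]: leans towards the "river" axis
print(bank_near_money)  # [0.25, 0.75]: leans towards the "money" axis
```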

Good integration lets neural networks learn from text well. This helps with everything from search engines to checking content.

Real-World Applications of Word Embeddings

Word embeddings have become key in many areas, from business to tech. They help machines understand language better. This makes digital experiences more advanced.

Enhancing Search and Recommendation Engines

Today’s search engines use word embeddings to get what you really mean. For example, a search for “comfortable walking shoes” can also match results described with related words like “cushioned” and “supportive”, even when the exact query words are missing. This gives you better results.
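One simple way to sketch this kind of semantic search is to average the word vectors in the query and in each document, then rank documents by cosine similarity. The vectors below are hand-made for illustration, and averaging is only one of several possible ways to embed a phrase. The sketch also assumes every text contains at least one known word.

```python
import math

# Hand-made 2-D word vectors: axis 0 ~ "comfort", axis 1 ~ "formality".
word_vectors = {
    "comfortable": [0.9, 0.1],
    "cushioned":   [0.8, 0.2],
    "supportive":  [0.7, 0.1],
    "walking":     [0.6, 0.2],
    "shoes":       [0.5, 0.5],
    "trainers":    [0.6, 0.3],
    "leather":     [0.2, 0.8],
    "formal":      [0.1, 0.9],
}


def embed(text):
    """Average the vectors of the known words in `text`."""
    vecs = [word_vectors[w] for w in text.lower().split() if w in word_vectors]
    return [sum(col) / len(vecs) for col in zip(*vecs)]


def cosine(a, b):
    dot = sum(x * y for x, y in zip(a, b))
    return dot / (math.hypot(*a) * math.hypot(*b))


def search(query, documents):
    """Return documents sorted from most to least similar to the query."""
    q = embed(query)
    return sorted(documents, key=lambda d: cosine(q, embed(d)), reverse=True)


docs = ["cushioned supportive trainers", "formal leather shoes"]
print(search("comfortable walking shoes", docs)[0])
# cushioned supportive trainers
```

The top result shares no words with the query, while the second document contains “shoes” but ranks lower. A keyword-only search would have ordered them the other way round.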

Recommendation systems also get a boost from word embeddings. Streaming services use them to suggest movies or music based on themes. E-commerce sites recommend products that share similar features, not just categories.

The table below shows how embeddings change recommendations:

| Platform Type | Traditional Approach | Embedding-Enhanced Approach | Improvement Metrics |
|---|---|---|---|
| E-commerce | Category-based matching | Semantic feature similarity | +32% click-through rate |
| Content Streaming | Genre matching | Thematic relationship analysis | +45% user engagement |
| News Aggregation | Keyword frequency | Contextual topic modelling | +28% time spent reading |

Supporting Conversational AI Systems

Conversational AI systems use word embeddings to understand what you mean. This lets virtual assistants handle different ways of asking the same thing. They get the real meaning behind your questions.

For example, asking a chatbot about “account balance” in different ways works because of embeddings. This makes conversations feel more natural.

Advanced systems keep track of conversations. They remember what was said before. This helps them give answers that make sense in the context of the conversation.

These technologies also help in content moderation. They can spot harmful content that simple filters miss. This is because they understand language in a deeper way.

Advantages and Challenges of Word Embeddings

Word embeddings have changed how machines understand language. They offer great benefits but also face big challenges. This look at word embeddings shows their power and the hurdles they need to overcome.

Benefits for NLP Tasks

Word embeddings greatly improve natural language processing tasks. They help machines grasp the meaning behind words, not just their surface level.

One key embedding advantage is reducing dimensionality. Traditional methods use high-dimensional, sparse vectors that are hard to work with. Embeddings shrink this into dense, lower-dimensional spaces, keeping important relationships intact.
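A quick back-of-the-envelope calculation shows the scale of the saving. The vocabulary size, embedding size, and 32-bit floats below are assumed figures, and the one-hot vector is counted as if stored in full rather than in a sparse format.

```python
VOCAB_SIZE = 50_000   # assumed vocabulary size
EMBED_DIM = 300       # assumed embedding size
BYTES_PER_FLOAT = 4   # 32-bit floats

one_hot_bytes = VOCAB_SIZE * BYTES_PER_FLOAT  # one slot per vocabulary word
dense_bytes = EMBED_DIM * BYTES_PER_FLOAT     # one slot per dimension

print(one_hot_bytes)                 # 200000 bytes per word
print(dense_bytes)                   # 1200 bytes per word
print(one_hot_bytes // dense_bytes)  # 166: the dense vector is ~166x smaller
```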

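These models are great at generalising. Because similar words get similar vectors, a system that has learned from “excellent” can often handle “superb” even if that word was rare in its own training data. This is super useful for tasks like sentiment analysis and text classification.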

“Word embeddings have fundamentally changed how computers understand human language, moving from syntactic pattern matching to semantic comprehension.”

More benefits include:

- Improved performance on NLP tasks

- Better handling of word meanings

- Less data sparsity in text processing

- Enhanced transfer learning across domains

Addressing Limitations and Biases

Word embeddings have many challenges. Polysemy, where words have different meanings, is a big one.

Contextual models like BERT have helped by creating dynamic representations. Static models such as Word2Vec and GloVe also struggle with out-of-vocabulary words, because a word never seen in training has no vector at all.

The biggest issue is bias. Embeddings learn from text and can pick up and amplify prejudices:

| Bias Type | Example | Mitigation Strategy |
|---|---|---|
| Gender Bias | “doctor” associated with male pronouns | Debiasing algorithms |
| Racial Bias | Ethnic names linked to negative adjectives | Diverse training data |
| Cultural Bias | Western perspectives dominating meanings | Multilingual corpora |

Researchers are working on fixing these ethical issues. They use debiasing, data augmentation, and careful data selection. These methods help reduce bias while keeping the benefits of embeddings.
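One widely discussed debiasing idea is projection-based: estimate a “gender direction” from a pair such as “he” and “she”, then remove that component from words that should be neutral. The sketch below uses toy two-dimensional vectors and a hypothetical `neutralise` helper. It illustrates the idea only, and projection of this kind may reduce measurable bias without removing it entirely.

```python
import math

# Toy 2-D vectors: axis 0 ~ "gender", axis 1 ~ "medicine". Illustrative only.
vectors = {
    "he":     [1.0, 0.0],
    "she":    [-1.0, 0.0],
    "doctor": [0.4, 0.9],
}


def unit(v):
    norm = math.sqrt(sum(x * x for x in v))
    return [x / norm for x in v]


def dot(a, b):
    return sum(x * y for x, y in zip(a, b))


# Estimate a gender direction from a definitional pair.
gender_direction = unit([a - b for a, b in zip(vectors["he"], vectors["she"])])


def neutralise(v, direction):
    """Remove the component of v that lies along `direction`."""
    scale = dot(v, direction)
    return [x - scale * d for x, d in zip(v, direction)]


before = dot(vectors["doctor"], gender_direction)
after = dot(neutralise(vectors["doctor"], gender_direction), gender_direction)
print(before)  # 0.4: "doctor" leans towards "he"
print(after)   # 0.0: no component left along the gender direction
```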

The field is moving towards more fair and robust embeddings. Ongoing research aims to create models that handle bias well while keeping the embedding advantages that make them useful for NLP.

Future Directions in Embedding Technologies

Innovation in embedding technologies is pushing beyond old ways of showing text. Researchers and developers are looking into new future trends. These trends aim to make machines better at understanding and processing language.

Subword embeddings are a big step forward. FastText represents each word as a set of character n-grams, so it can build vectors for words that whole-word methods have never seen. This is great for rare words and for morphologically rich languages, where one word takes many forms.
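The n-gram step can be sketched in a few lines. This version uses only trigrams and a hypothetical helper name `char_ngrams`. As far as the published method goes, FastText uses a range of n-gram lengths plus the whole word and hashes the n-grams into buckets, none of which is shown here.

```python
def char_ngrams(word, n_min=3, n_max=3):
    """Character n-grams of `word`, with < and > marking word boundaries."""
    marked = f"<{word}>"
    return [marked[i:i + n]
            for n in range(n_min, n_max + 1)
            for i in range(len(marked) - n + 1)]


print(char_ngrams("where"))
# ['<wh', 'whe', 'her', 'ere', 're>']

# An unseen word still shares n-grams with known words.
shared = set(char_ngrams("unhappiness")) & set(char_ngrams("happiness"))
print(sorted(shared))
```

A word’s vector is then built from the vectors of its n-grams, so “unhappiness” can borrow from “happiness” even if it never appeared in training.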

Multi-modal embedding models are also getting a lot of attention. These models learn from different types of data, like text, images, and sound. They help with tasks like describing images and finding similar items across different types of data.

Dynamic contextual embeddings are evolving. Future versions might be more efficient, thanks to new techniques. This could make advanced embeddings work better in real-time.

Reducing bias in embeddings is a big focus. New methods, like adversarial debiasing, are being explored. The goal is to make embeddings fairer without losing their usefulness.

Scalability is also a key area. Researchers are working on making training faster and more efficient. This could help make high-quality embeddings available even when resources are limited.

The table below compares emerging embedding approaches and their impacts:

| Technique | Key Innovation | Potential Impact | Adoption Challenge |
|---|---|---|---|
| Subword Embeddings | Character-level analysis | Better handling of rare words | Increased computational requirements |
| Multi-modal Models | Cross-data type integration | Richer contextual understanding | Data alignment complexities |
| Dynamic Contextualisation | Adaptive representation | More nuanced language understanding | Model complexity management |
| Bias Mitigation | Fairness constraints | More equitable AI systems | Performance trade-off balancing |

Real-time processing is another area being worked on. Future systems might be able to generate embeddings quickly. This could change how we use AI in real-time.

The study of these future trends shows how embedding technologies are growing. As they get better, they will be more advanced, efficient, and fair. These improvements will shape how AI systems understand and interact with human language.

Conclusion

Word embeddings have changed how machines get language. They turn text into numbers, showing the meaning and how words relate. This is key for better natural language processing in many areas.

These embeddings are vital in many smart systems. Models like Word2Vec and BERT produce them, and downstream applications build on them. They are a big part of today’s AI.

Embeddings are very important for AI. They help computers understand language better. They keep getting better, helping AI grow.

It’s important to keep working on embedding methods. As AI gets smarter, these tools will help even more. They are key to making systems that understand us better.