Artificial intelligence (AI) technologies are transforming industries, from image recognition to virtual assistants. These advancements rely heavily on machine learning techniques, which include both neural networks and deep learning. While these terms are often used interchangeably, they represent distinct concepts with unique applications.

One of the key differences lies in their architecture. Traditional neural networks typically have fewer layers, making them simpler but less capable of handling complex tasks. In contrast, deep learning systems use multiple layers to process vast amounts of data, enabling more sophisticated analysis and decision-making.

Understanding these distinctions is crucial for businesses adopting AI solutions. By leveraging the right technology, organizations can unlock new opportunities and stay competitive in today’s fast-paced digital landscape.

Introduction to Deep Learning and Neural Networks

AI-driven technologies are redefining industries by enabling smarter decision-making. At the core of these advancements lies artificial intelligence, a broad field focused on creating systems that mimic human intelligence. Within AI, machine learning plays a pivotal role, using algorithms to recognize patterns and make predictions.

One of the most powerful tools in machine learning is the neural network. Inspired by the structure of biological brains, neural networks consist of interconnected nodes that process information. These models are designed to handle complex tasks, such as image recognition and language translation.

Building on this foundation, deep learning systems take neural networks to the next level. By using multiple layers, these systems can analyze vast amounts of data with greater accuracy. While all deep learning relies on neural networks, not all neural networks qualify as deep learning.

Understanding these concepts is essential for leveraging AI effectively. Here’s a quick breakdown:

- Artificial Intelligence: The overarching field concerned with building systems that make human-like decisions.

- Machine Learning: A subset of AI focused on pattern recognition and prediction.

- Neural Networks: Node-based systems inspired by biological brains.

- Deep Learning: A subset of machine learning that uses neural networks with many layers.

Understanding the Architecture: Deep Learning vs. Traditional Neural Networks

The architecture of AI systems plays a pivotal role in their functionality and efficiency. By examining the structural differences between traditional neural networks and deep learning models, we can better understand their capabilities and limitations.

Traditional Neural Network Architecture

Traditional neural networks, such as shallow feedforward networks (multilayer perceptrons), are relatively simple in design. They typically have only one or two hidden layers, and data flows in one direction from input to output. This structure limits their ability to handle complex tasks but makes them efficient for basic pattern recognition.

These networks adjust their weights and biases with standard gradient-based learning, usually backpropagation. While effective for smaller datasets, their shallow architecture restricts their capacity to learn intricate features.
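As a rough illustration of the structure described above, here is a minimal sketch of a forward pass through a network with one hidden layer. The layer sizes, the sigmoid activation, the random initialization, and the names `dense`, `init_layer`, and `forward` are assumptions chosen for the example, not details from any particular system.

```python
import math
import random

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def dense(inputs, weights, biases, activation):
    """One fully connected layer: activation(W x + b), one output per weight row."""
    return [
        activation(sum(w * x for w, x in zip(row, inputs)) + b)
        for row, b in zip(weights, biases)
    ]

def init_layer(n_in, n_out, rng):
    """Random weights in [-1, 1] and zero biases (an arbitrary choice)."""
    weights = [[rng.uniform(-1, 1) for _ in range(n_in)] for _ in range(n_out)]
    biases = [0.0] * n_out
    return weights, biases

rng = random.Random(0)
w_hidden, b_hidden = init_layer(4, 3, rng)  # 4 inputs -> 3 hidden units
w_out, b_out = init_layer(3, 1, rng)        # 3 hidden units -> 1 output

def forward(x):
    """Data flows one way: input -> hidden layer -> output."""
    hidden = dense(x, w_hidden, b_hidden, sigmoid)
    return dense(hidden, w_out, b_out, sigmoid)

prediction = forward([0.5, -1.2, 3.0, 0.7])
print(prediction)  # a single value between 0 and 1
```

The weights here are untrained, so the output is meaningless as a prediction; the point is only the unidirectional flow through a single hidden layer.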

Deep Learning Architecture

In contrast, deep learning systems use multiple layers to process data, enabling more sophisticated analysis. Convolutional Neural Networks (CNNs), for example, are built from three main layer types: convolutional, pooling, and fully connected layers. This architecture is particularly effective for image processing tasks.
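To make the three CNN layer types concrete, the sketch below applies one convolution, one pooling step, and one fully connected step to a tiny image. It is a toy under stated assumptions: a single channel, one hand-written kernel, a ReLU activation, and illustrative function names; real CNNs learn many kernels and use optimized libraries.

```python
def conv2d(image, kernel):
    """Slide the kernel over the image (no padding, stride 1)."""
    kh, kw = len(kernel), len(kernel[0])
    out_h = len(image) - kh + 1
    out_w = len(image[0]) - kw + 1
    return [
        [
            sum(image[i + a][j + b] * kernel[a][b]
                for a in range(kh) for b in range(kw))
            for j in range(out_w)
        ]
        for i in range(out_h)
    ]

def relu(fmap):
    return [[max(0.0, v) for v in row] for row in fmap]

def max_pool(fmap, size=2):
    """Keep the largest value in each non-overlapping size x size window."""
    return [
        [
            max(fmap[i + a][j + b] for a in range(size) for b in range(size))
            for j in range(0, len(fmap[0]) - size + 1, size)
        ]
        for i in range(0, len(fmap) - size + 1, size)
    ]

def fully_connected(features, weights, bias):
    return sum(w * f for w, f in zip(weights, features)) + bias

# 5x5 image with a vertical edge: dark on the left, bright on the right.
image = [[0.0, 0.0, 1.0, 1.0, 1.0] for _ in range(5)]
kernel = [[-1.0, 1.0], [-1.0, 1.0]]  # responds to dark-to-bright vertical edges

feature_map = relu(conv2d(image, kernel))  # 4x4 map, strongest at the edge
pooled = max_pool(feature_map)             # 2x2 summary of the map
flat = [v for row in pooled for v in row]
score = fully_connected(flat, [0.25] * 4, 0.0)

print(pooled)  # [[2.0, 0.0], [2.0, 0.0]]
print(score)   # 1.0
```

The convolution finds the edge, pooling shrinks the map while keeping the strongest response, and the fully connected step turns the remaining features into a single score.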

Recurrent Neural Networks (RNNs) introduce directed cycles, allowing them to retain memory and process temporal data sequences. This makes them ideal for applications like speech recognition and language modeling.
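The memory effect of a recurrent connection can be sketched with a single hidden unit. The weights `w_x` and `w_h`, the tanh activation, and the function names are assumptions picked for illustration; practical RNNs use weight matrices and learned parameters.

```python
import math

def rnn_step(x_t, h_prev, w_x, w_h, b):
    """One recurrent update: the new state depends on the input AND the old state."""
    return math.tanh(w_x * x_t + w_h * h_prev + b)

def run_rnn(sequence, w_x=0.5, w_h=0.8, b=0.0):
    h = 0.0  # initial hidden state
    states = []
    for x_t in sequence:
        h = rnn_step(x_t, h, w_x, w_h, b)
        states.append(h)
    return states

# The two sequences differ only in their first element.
states_a = run_rnn([1.0, 0.0, 0.0])
states_b = run_rnn([0.0, 0.0, 0.0])

print(states_a)  # nonzero at every step: the first input is still "remembered"
print(states_b)  # [0.0, 0.0, 0.0]
```

Even though the last two inputs are identical, the final states differ, because the hidden state carries information forward from earlier time steps.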

The additional layers in deep neural networks enable feature hierarchy learning, where each layer extracts increasingly complex features. In a CNN trained on images, for example, early layers tend to detect edges, middle layers combine them into textures and shapes, and later layers respond to whole objects.

By leveraging these advanced architectures, deep learning models achieve higher accuracy and flexibility, making them indispensable in modern AI applications.

Complexity and Computational Demands

The complexity of AI systems varies significantly based on their architecture and design. Traditional neural networks and deep learning models differ in their structure, which directly impacts their computational demands and resource requirements.

Parameters and Layers in Traditional Neural Networks

Traditional neural networks have a relatively low number of parameters, such as weights and biases. These systems typically use fewer layers, making them simpler and less resource-intensive. However, this simplicity limits their ability to handle complex tasks.

For example, a basic feedforward network might process data in a single hidden layer. While efficient for smaller datasets, this architecture struggles with intricate patterns or large-scale data processing.

Deep Learning Systems: A Deeper Dive into Complexity

In contrast, deep learning algorithms use multiple layers to process data, significantly increasing their complexity. These systems often include specialized layers, such as convolutional or recurrent layers, to handle specific tasks like image recognition or language modeling.

Deep learning models typically have far more parameters, which increases their capacity to fit complex data but also their computational demands. Some architectures also serve more than one purpose: autoencoders, for instance, learn to compress data and reconstruct it, and the same model can be used for anomaly detection.

Here’s a quick comparison of resource requirements:

- Traditional Neural Networks: Fewer parameters, lower computational power, and smaller datasets.

- Deep Learning Systems: More parameters, higher computational power, and larger datasets.
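To put rough numbers on the comparison above, the sketch below counts weights and biases for two fully connected networks. The layer sizes are hypothetical examples (784 inputs corresponds to a 28x28 image), not figures from a specific model.

```python
def count_parameters(layer_sizes):
    """Weights (n_in * n_out) plus biases (n_out) for each pair of adjacent layers."""
    return sum(n_in * n_out + n_out
               for n_in, n_out in zip(layer_sizes, layer_sizes[1:]))

shallow = [784, 128, 10]                  # one hidden layer
deep = [784, 512, 512, 256, 128, 10]      # four hidden layers

shallow_params = count_parameters(shallow)
deep_params = count_parameters(deep)

print(shallow_params)  # 101770
print(deep_params)     # 830090
```

In this example the deeper network has roughly eight times as many parameters, and each one must be stored and updated on every training step. Production deep learning models are far larger than either.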

Training deep learning models demands robust hardware, such as GPUs with high core counts and large RAM. Additionally, energy consumption becomes a challenge in large-scale implementations.

Training Processes: Speed and Efficiency

The efficiency of AI systems often hinges on their training processes. How models are trained directly impacts their speed, accuracy, and ability to handle complex tasks. Understanding these methods is essential for optimizing performance.

Training Traditional Neural Networks

Traditional networks have few layers and parameters, so they train quickly but are less adaptable to intricate patterns. They are typically trained with supervised learning, in which backpropagation and gradient descent adjust weights and biases against labeled examples.

For example, a basic feedforward network processes data in a single hidden layer. While efficient for smaller datasets, this approach struggles with large-scale or complex data.

Training Advanced Models

In contrast, advanced systems stack many layers, yet they are trained with the same core method: backpropagation, which adjusts weights by attributing the output error back to each layer. What changes is the scale and the supporting techniques, such as improved optimizers, normalization, and regularization. These models can also learn from raw data with minimal labeling through unsupervised, semi-supervised, or self-supervised learning.
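The idea of adjusting weights by attributing errors can be sketched on a very small network. Everything specific here is an assumption for illustration: two inputs, two tanh hidden units, one linear output, a squared-error loss, hand-picked starting weights, and a learning rate of 0.01. The finite-difference check at the end is a common way to confirm that hand-derived gradients are correct.

```python
import math

def forward(params, x):
    w1, b1, w2, b2 = params
    hidden = [math.tanh(sum(w * xi for w, xi in zip(row, x)) + b)
              for row, b in zip(w1, b1)]
    output = sum(w * h for w, h in zip(w2, hidden)) + b2
    return hidden, output

def loss(params, x, target):
    _, output = forward(params, x)
    return 0.5 * (output - target) ** 2

def backprop(params, x, target):
    """Chain rule, applied from the output back toward the input."""
    w1, b1, w2, b2 = params
    hidden, output = forward(params, x)
    d_output = output - target                       # dL/d(output)
    grad_w2 = [d_output * h for h in hidden]
    grad_b2 = d_output
    # Share of the error attributed to each hidden unit; tanh'(z) = 1 - tanh(z)^2.
    d_hidden = [d_output * w * (1.0 - h * h) for w, h in zip(w2, hidden)]
    grad_w1 = [[d * xi for xi in x] for d in d_hidden]
    grad_b1 = d_hidden
    return grad_w1, grad_b1, grad_w2, grad_b2

params = ([[0.1, -0.2], [0.4, 0.3]], [0.0, 0.1], [0.5, -0.6], 0.05)
x, target = [1.0, 2.0], 1.0
grad_w1, grad_b1, grad_w2, grad_b2 = backprop(params, x, target)

# Finite-difference check on a single weight, w1[0][0].
eps = 1e-6
bumped = ([[0.1 + eps, -0.2], [0.4, 0.3]], [0.0, 0.1], [0.5, -0.6], 0.05)
numeric = (loss(bumped, x, target) - loss(params, x, target)) / eps

# One gradient-descent step with a small learning rate.
lr = 0.01
w1, b1, w2, b2 = params
updated = (
    [[w - lr * g for w, g in zip(row, g_row)] for row, g_row in zip(w1, grad_w1)],
    [b - lr * g for b, g in zip(b1, grad_b1)],
    [w - lr * g for w, g in zip(w2, grad_w2)],
    b2 - lr * grad_b2,
)
print(loss(params, x, target), loss(updated, x, target))  # loss goes down
```

Deep learning frameworks automate exactly this bookkeeping across many layers, which is why the same method scales from shallow to deep networks.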

However, this complexity increases time and resources needed for training. Batch size optimization and transfer learning are often employed to reduce these demands.

Key differences in training include:

- Traditional Systems: Faster training, limited complexity handling, and reliance on labeled datasets.

- Advanced Models: Slower training, higher accuracy, and ability to process raw data.

By leveraging the right learning algorithms, organizations can balance speed and efficiency in their AI systems.

Performance and Capabilities

The effectiveness of AI systems is often measured by their ability to handle diverse tasks with precision. Whether it’s recognizing patterns in data or achieving high accuracy in complex applications, the performance of these systems varies significantly based on their design.

Performance of Traditional Neural Networks

Traditional systems excel in simpler tasks, such as basic classification or pattern recognition. For example, a feedforward network with a single hidden layer can exceed 95% accuracy on the MNIST handwritten-digit dataset. However, its shallow architecture limits its ability to process more complex data, such as natural images.

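Simply adding layers did not solve this historically. Deeper networks built with sigmoid or tanh activations ran into the vanishing gradient problem, where gradients shrink as they propagate backward through the layers, so the earliest layers barely learn. This restricted how deep these networks could usefully be, making them less effective for advanced applications.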
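The vanishing gradient effect can be shown numerically with a chain of one-unit sigmoid layers. This is a simplified sketch: the chain structure, the weight of 1.0, and the input value are assumptions for the example. The key fact it relies on is that the sigmoid derivative never exceeds 0.25, so each extra layer multiplies the gradient by a small factor.

```python
import math

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def gradient_through_chain(depth, weight=1.0, x=0.5):
    """d(output)/d(input) for `depth` stacked one-unit sigmoid layers."""
    activation, grad = x, 1.0
    for _ in range(depth):
        activation = sigmoid(weight * activation)
        # sigmoid'(z) = s * (1 - s), which is at most 0.25
        grad *= weight * activation * (1.0 - activation)
    return grad

shallow_grad = gradient_through_chain(2)
deep_grad = gradient_through_chain(20)

print(shallow_grad)  # about 0.05
print(deep_grad)     # many orders of magnitude smaller
```

With 20 layers the gradient reaching the input is vanishingly small, so gradient descent makes almost no progress on the early layers. Activations such as ReLU, careful initialization, and residual connections are among the techniques that made deeper networks trainable.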

Performance of Advanced Systems

In contrast, advanced systems demonstrate superior performance in handling intricate tasks. For instance, convolutional deep learning models achieve over 99% accuracy on the MNIST dataset. They also excel in natural language processing: at its release, BERT reached a score of about 80 on the GLUE benchmark, a clear improvement over earlier models.

These systems use multiple layers to extract complex features, enabling better contextual understanding in tasks like image recognition and language modeling. However, their complexity increases the risk of overfitting, requiring careful optimization during training.

Key differences in performance include:

- Traditional Systems: Efficient for simple tasks but limited in handling complex data.

- Advanced Models: Higher accuracy and adaptability for diverse applications.

By understanding these distinctions, organizations can choose the right system for their specific needs, ensuring optimal performance and efficiency.

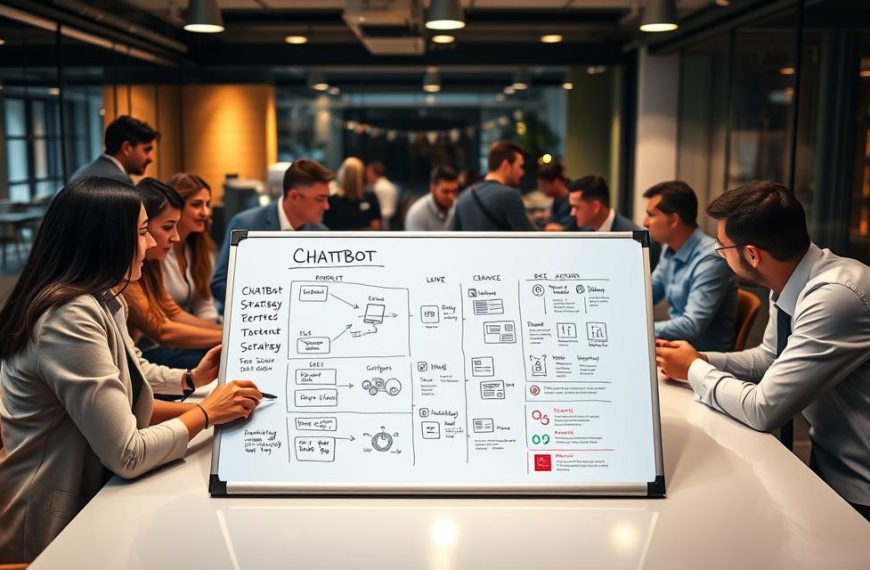

Applications in Real-World Scenarios

From fraud detection to self-driving cars, AI technologies are reshaping industries with their diverse applications. Both traditional neural networks and advanced models play critical roles in solving real-world problems, but their capabilities vary significantly.

Applications of Traditional Neural Networks

Traditional systems excel in simpler tasks like fraud detection and basic optical character recognition (OCR). For instance, credit scoring systems rely on these algorithms to assess risk and make lending decisions. Their straightforward architecture makes them efficient for tasks requiring less complexity.

Another example is OCR technology, which converts scanned documents into editable text. While effective, these systems often struggle with handwriting recognition or complex layouts, highlighting their limitations.

Applications of Advanced Models

In contrast, advanced systems like Tesla Autopilot and GPT-4 demonstrate the power of deep learning. Tesla’s driver-assistance system uses convolutional neural networks (CNNs) to process real-time image data from the car’s cameras. Similarly, GPT-4 excels in natural language processing, generating human-like text for various applications.

Medical imaging diagnostics is another area where deep learning shines. These models analyze complex data, such as MRI scans, to detect diseases with remarkable accuracy. Additionally, generative AI tools like DALL-E create stunning visuals, showcasing the versatility of these systems.

However, the ethical implications of generative AI, such as misinformation or bias, require careful consideration. As these technologies evolve, addressing these challenges becomes essential for responsible innovation.

Conclusion: What Sets Deep Learning Apart from Traditional Neural Networks?

The evolution of AI technologies has brought significant advancements in processing and analyzing complex data. The primary difference between traditional neural networks and deep learning lies in architecture. Traditional systems use fewer layers, limiting their ability to handle intricate tasks. In contrast, deep learning models leverage multiple layers, enabling superior performance in unstructured data processing.

For businesses, adopting these technologies requires a cost/benefit analysis. While deep learning offers higher accuracy, it demands more computational resources. However, its ability to analyze vast datasets makes it invaluable for modern applications like image recognition and natural language processing.

Looking ahead, the convergence of neural networks with neuromorphic computing promises even greater efficiency. Enterprises scaling AI should focus on integrating these advanced models strategically, ensuring they align with their operational goals and resource capabilities.

FAQ

How does the architecture of deep learning differ from traditional neural networks?

Deep learning systems use multiple hidden layers, enabling them to process complex patterns in data. Traditional neural networks typically have fewer layers, limiting their ability to handle intricate tasks like image recognition or natural language processing.

What makes deep learning more computationally demanding?

Deep learning models require significant computational power and resources due to their complex structure and the large amounts of data they process. Traditional neural networks are less resource-intensive but also less capable of handling advanced tasks.

How does training differ between deep learning and traditional neural networks?

Training deep learning models involves processing vast datasets and optimizing numerous parameters, which can be time-consuming. Traditional neural networks train faster but may not achieve the same level of accuracy or performance in complex applications.

What are the key performance differences between these systems?

Deep learning excels in tasks like image and speech recognition, offering higher accuracy and adaptability. Traditional neural networks perform well in simpler tasks but struggle with more advanced applications requiring intricate pattern recognition.

Where are deep learning models commonly applied?

Deep learning is widely used in fields like healthcare, autonomous vehicles, and natural language processing. Traditional neural networks are often applied in simpler tasks such as basic classification or regression problems.

Why is deep learning considered more powerful for artificial intelligence?

Deep learning’s ability to process large amounts of data and extract complex features makes it a cornerstone of modern artificial intelligence. Its multi-layered architecture, loosely inspired by the brain, learns features at several levels of abstraction, enabling it to tackle sophisticated tasks effectively.